Running an AI model locally on your computer is a good idea if you are concerned about privacy. Quite a lot of AI models run on regular computers, so you don’t need a powerful workstation, let alone a supercomputer, to use them. Many of them run offline, keep your data private, and don’t require an API key or any additional costs. So, which AI models can run on a regular PC? Here is a list of tools that let you run them.

Which AI models can run on a regular PC?

You can use any of these tools, depending on your hardware.

- GPT4All

- LM Studio

- Ollama

- Jan.ai

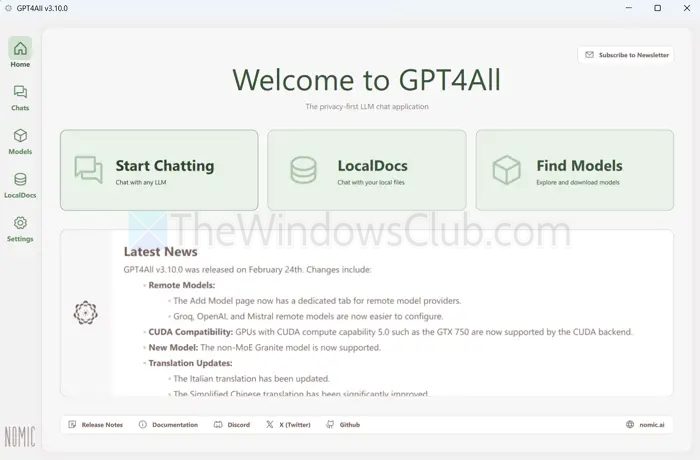

1] GPT4All

GPT4All is developed by Nomic AI and supports models from the LLaMA, MPT, Falcon, and Mistral families. It is a local AI chat application designed to be easily installed and run on almost any PC. It offers multiple models (mostly around the 7B-parameter range) optimized for local inference on a CPU or GPU.

Key features

- Runs completely offline, keeping your data private.

- Good for general chat, Q&A, and light reasoning.

- Lets you customize your language model.

- Lets you chat with your files privately.

Hardware requirements

- CPU: A modern Intel Core i5/i7 or AMD Ryzen (GPT4All installers require a CPU that supports the AVX/AVX2 instruction sets)

- RAM: 8–16 GB

- GPU: Optional (an NVIDIA GPU helps but is not required)

- Disk: 4–8 GB for model files

- OS: Windows 10 or later, macOS Monterey 12.6 or later, or Ubuntu 22.04 LTS or later
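GPT4All needs AVX/AVX2, and so do the other tools in this list. It can help to confirm that your CPU supports these instruction sets before downloading anything.

Below is a minimal Python sketch for Linux only. It assumes the standard `/proc/cpuinfo` format on x86 machines, where each CPU lists its instruction-set extensions on a `flags` line. The function names are illustrative and not part of any tool. On Windows or macOS you would need a different method.

```python
import platform


def has_cpu_flags(cpuinfo_text: str, required=("avx", "avx2")) -> bool:
    """Return True if every required flag appears on the first 'flags' line."""
    for line in cpuinfo_text.splitlines():
        # x86 Linux lists extensions as e.g. "flags : fpu sse2 avx avx2 ..."
        if line.startswith("flags"):
            _, _, rest = line.partition(":")
            flags = set(rest.split())
            return all(flag in flags for flag in required)
    # No 'flags' line: not an x86 CPU (ARM uses 'Features') or an unexpected format
    return False


def check_this_machine() -> None:
    """Print whether AVX and AVX2 were found (Linux only)."""
    if platform.system() != "Linux":
        print("This sketch only reads /proc/cpuinfo on Linux.")
        return
    with open("/proc/cpuinfo", encoding="utf-8") as f:
        ok = has_cpu_flags(f.read())
    print("AVX and AVX2 found" if ok else "AVX/AVX2 not detected")


if __name__ == "__main__":
    check_this_machine()
```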

How to install and set up GPT4All?

- Download GPT4All from its official website.

- Install it by following all the on-screen steps.

- Once installed, launch the app and click on Start Chatting.

- It will prompt you to install a model, so click the Install a Model button.

- Next, you will get a list of available models – browse through them and download a model that suits your requirements.

- Once done, you are all set to chat with GPT4All locally.
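If you prefer scripting to the desktop app, Nomic also publishes GPT4All as a Python package (`pip install gpt4all`). Below is a minimal sketch that assumes the package is installed.

- The model file name is taken from the package's README as an example.
- `ask_gpt4all` is a hypothetical helper name.
- The first call downloads the model (several GB); after that, generation runs locally.

```python
# Example model from the gpt4all README; any model from GPT4All's list should work
DEFAULT_MODEL = "Meta-Llama-3-8B-Instruct.Q4_0.gguf"


def ask_gpt4all(prompt: str, model_name: str = DEFAULT_MODEL, max_tokens: int = 256) -> str:
    """Generate a reply with the gpt4all Python bindings (pip install gpt4all)."""
    # Imported inside the function so this file loads even without gpt4all installed
    from gpt4all import GPT4All

    # The first call downloads the model file; later calls load it from disk
    model = GPT4All(model_name)
    # chat_session() applies the model's chat template for the conversation
    with model.chat_session():
        return model.generate(prompt, max_tokens=max_tokens)
```

For example, run `print(ask_gpt4all("Summarise the benefits of running LLMs locally."))`. Loading the model on every call keeps the sketch short but is slow. For repeated prompts, create the `GPT4All` object once and reuse it.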

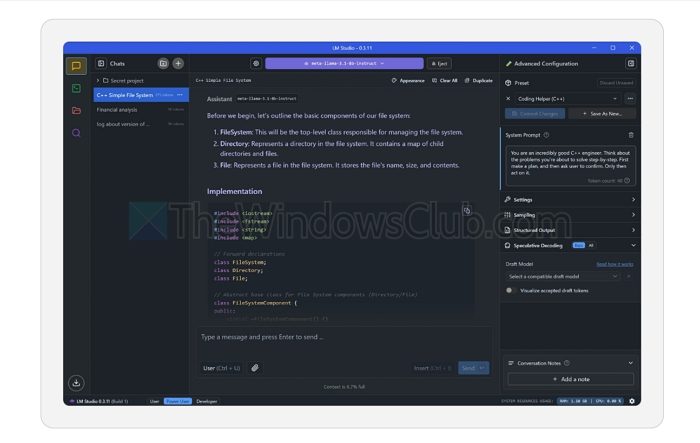

2] LM Studio

LM Studio is a beginner-friendly desktop app. It allows you to browse, download, and chat with dozens of popular models through a clean, modern interface.

Key features

- Run LLMs entirely on your computer.

- Has a built-in model browser for searching and downloading a wide range of open-source LLMs.

- Once a model is downloaded, it can be run completely offline.

- Supports chatting with documents.
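LM Studio can also serve a loaded model through a local server that uses the OpenAI chat-completions request format. By default it listens on port 1234; check the app's server settings if you changed it.

The sketch below uses only Python's standard library. `build_chat_request`, `chat`, and the model ID in the usage line are illustrative names, not part of LM Studio.

```python
import json
import urllib.request

# LM Studio's default local server address (adjust if you changed the port)
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(model: str, user_message: str, temperature: float = 0.7) -> dict:
    """Build an OpenAI-style chat completion payload."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
        "temperature": temperature,
    }


def chat(model: str, user_message: str, url: str = LMSTUDIO_URL) -> str:
    """POST the payload to the local server and return the reply text."""
    data = json.dumps(build_chat_request(model, user_message)).encode("utf-8")
    req = urllib.request.Request(url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses put the text at choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

With the server running, call `print(chat("your-model-id", "Give me three tips for writing clear emails."))`. Replace `your-model-id` with the identifier LM Studio shows for the loaded model.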

Hardware requirements

- CPU: A modern multicore CPU with AVX2 instruction set support is required for Windows and Linux.

- RAM: 8 GB

- GPU: 6 GB of VRAM

- Storage: 50 GB

3] Ollama

Ollama is another open-source framework that simplifies the process of running LLMs locally on your computer. It now includes a simple chat app, but it takes a command-line-first approach. That makes it particularly appealing to developers and to anyone who prefers scripting and automation.

Key features

- Command-line interface (CLI) for downloading and running models.

- Comes with a growing library of pre-trained, ready-to-use open-source LLMs.

- You can create custom Modelfiles to define specific behaviours, parameters, and system prompts.

- Designed to be lightweight; it runs as a background service.
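Ollama's customisation works through a plain-text Modelfile:

- `FROM` names the base model.
- `PARAMETER` sets options such as `temperature`.
- `SYSTEM` sets the system prompt.

The Python sketch below only assembles such a file. `make_modelfile` is a hypothetical helper, and `llama3.2` is just an example base model from the Ollama library.

```python
def make_modelfile(base_model: str, system_prompt: str, temperature: float = 0.7) -> str:
    """Return the text of an Ollama Modelfile that customises a base model."""
    lines = [
        f"FROM {base_model}",                    # model to build on
        f"PARAMETER temperature {temperature}",  # sampling temperature
        f'SYSTEM """{system_prompt}"""',         # system prompt used in every chat
    ]
    return "\n".join(lines) + "\n"


text = make_modelfile("llama3.2", "You are a concise assistant that answers in plain English.", 0.3)
```

To use it:

1. Save the returned text as a file named `Modelfile`.
2. Build the custom model with `ollama create my-assistant -f Modelfile`.
3. Run it with `ollama run my-assistant`.

Here `my-assistant` is an arbitrary name.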

Hardware requirements

- CPU: A modern multicore CPU with AVX2 instruction set support.

- RAM: 8 GB

- GPU: 8 GB of VRAM (an NVIDIA GPU with CUDA is recommended for best performance; AMD GPUs are supported via ROCm on some cards; Ollama is well optimized for Apple Silicon Macs, where it uses the integrated GPU)

- Storage: 50 GB SSD (for the application and at least one small model)
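Because Ollama runs as a background service, it also accepts HTTP requests on your machine, by default at `http://localhost:11434`. Below is a minimal sketch that uses Python's standard library and Ollama's `/api/generate` endpoint. The helper names are illustrative. The sketch assumes you have already pulled the model, for example with `ollama pull llama3.2`.

```python
import json
import urllib.request

# Ollama's default local address and text-generation endpoint
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_payload(model: str, prompt: str) -> dict:
    # stream=False asks Ollama for one JSON object instead of a stream of chunks
    return {"model": model, "prompt": prompt, "stream": False}


def generate(model: str, prompt: str, url: str = OLLAMA_URL) -> str:
    """Send a prompt to the local Ollama service and return the generated text."""
    data = json.dumps(build_generate_payload(model, prompt)).encode("utf-8")
    req = urllib.request.Request(url, data=data, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)["response"]
```

For example, `print(generate("llama3.2", "Explain quantization in two sentences."))`. Setting `"stream": False` keeps the example simple; by default, Ollama streams the answer back as a series of JSON lines.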

4] Jan.ai

If you are looking for a ChatGPT alternative, Jan.ai can be a great tool. It is an open-source, privacy-focused desktop app that lets you run LLMs locally on your computer.

Key features

- 100% offline operation.

- Prioritises user privacy.

- Lets you download and run open-source LLMs on your computer.

- Designed to run on a wide range of hardware, including regular computers.
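Jan can also expose downloaded models through a local, OpenAI-compatible API server, which you enable in its settings. This sketch assumes the server's default address of `http://127.0.0.1:1337/v1`. Depending on your Jan version, you may also need to set an API key in the same settings screen and pass it in.

The sketch lists the model IDs the server reports, which you need for chat requests. The helper names are hypothetical.

```python
import json
import urllib.request
from typing import List, Optional

# Assumed default address; confirm it in Jan's local API server settings
JAN_BASE_URL = "http://127.0.0.1:1337/v1"


def model_ids(models_response: dict) -> List[str]:
    """Extract model IDs from an OpenAI-style /v1/models response."""
    return [m["id"] for m in models_response.get("data", [])]


def list_local_models(base_url: str = JAN_BASE_URL, api_key: Optional[str] = None) -> List[str]:
    """Ask the local server which models it can serve."""
    headers = {}
    if api_key:  # only needed if an API key is configured for the server
        headers["Authorization"] = f"Bearer {api_key}"
    req = urllib.request.Request(f"{base_url}/models", headers=headers)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return model_ids(json.load(resp))
```

Run `print(list_local_models())` while Jan's server is running. Because the endpoint follows the OpenAI format, the same function should also work against other OpenAI-compatible local servers, such as LM Studio's at `http://localhost:1234/v1`.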

Hardware requirements

- CPU: A modern multicore CPU with AVX2 instruction set support (Intel Core i5 / AMD Ryzen 5 equivalent or newer recommended)

- RAM: 8 GB

- GPU: 8 GB of VRAM (an NVIDIA GPU with CUDA is recommended for best performance; AMD GPUs are supported via ROCm or Vulkan; on Apple Silicon Macs, Jan uses the integrated GPU through Metal)

- Storage: 50 GB

So, those were a few ways to run AI models on a regular PC. Strictly speaking, these are not AI models themselves but tools that let you download and run open-source LLMs locally. Go ahead, try them out, and see which one suits you best.

Can I use local AI tools without an internet connection?

Yes, once you’ve downloaded the required models, tools like GPT4All, LM Studio, Ollama, and Jan.ai can run completely offline. This means you can chat or process data privately without sending anything over the internet, making them ideal for privacy-conscious users.

Do I need a dedicated GPU to run AI tools smoothly?

No, a GPU is not strictly required for basic use. Most tools can run models on the CPU. They also support quantized (compressed) models, which store their weights at lower precision and therefore work well on regular hardware. However, a GPU (particularly an NVIDIA card with CUDA support) will significantly improve performance, especially for larger models or more intensive tasks.
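A rough rule of thumb shows why quantization matters: a model's weights take about (number of parameters × bits per weight ÷ 8) bytes. The sketch below applies that formula. It is only an approximation. It ignores the context cache and runtime overhead, and real quantization formats store a little extra data, so actual memory use is somewhat higher.

```python
def weights_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of a model's weights in GB: parameters x bits / 8 bits per byte."""
    return params_billions * bits_per_weight / 8


if __name__ == "__main__":
    # Compare full-precision and quantized sizes for a 7B-parameter model
    for bits in (16, 8, 4):
        print(f"7B model at {bits}-bit: ~{weights_size_gb(7, bits):.1f} GB of weights")
```

A 7B model needs about 14 GB for its weights at 16-bit but only about 3.5 GB at 4-bit. That is why quantized 7B models fit comfortably on a PC with 8–16 GB of RAM.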