At first, we had single-core CPUs. A single-core CPU is clocked at a certain speed and can deliver only the performance that speed allows. Then came the age of CPUs with multiple cores, where every core can execute its own stream of instructions independently. For workloads that can be split across cores, this raises a CPU's throughput, at best roughly in proportion to the number of cores, and so improves the computing device's overall performance. But the human tendency is to always look for something even better. Hence, multithreading was introduced, which increased performance slightly, and then came Hyper-Threading. It was first introduced in 2002 with Intel's Xeon processors. With Hyper-Threading, a core spends less time idle, because it can work on a second thread while the first one is waiting.

After its debut on the Xeon, it appeared in consumer desktop processors with the Pentium 4. It is also present in Intel's Itanium, Atom, and Core i series of processors.

What is Hyper-Threading in computers?

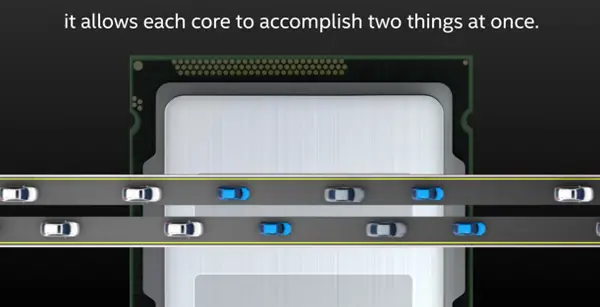

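With Hyper-Threading, each physical core presents itself to the operating system as two logical processors, so it can hold two threads at once. When one thread has to wait, for example for data from memory, the core can run instructions from the other thread instead of sitting idle, with almost no delay in switching between them.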

With Hyper-Threading, Intel aims to get more work out of each core in a given amount of time. A single processor core keeps two threads ready and interleaves their instructions, so execution resources that one thread leaves unused can be given to the other. A single task generally does not run faster on its own, but a set of tasks running together can finish sooner.

It directly takes advantage of the superscalar architecture, in which a single core can work on multiple independent instructions, each operating on separate data, at the same time. However, the operating system must also be compatible: it must support simultaneous multithreading (SMT) so that it can schedule threads onto the logical processors each core exposes.

Also, according to Intel, if your operating system does not support this functionality, you should disable Hyper-Threading.
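One rough way to see whether the operating system is exposing the extra logical processors is to compare the number of logical processors with the number of physical cores. The sketch below assumes a Linux system whose `/proc/cpuinfo` lists `processor`, `physical id`, and `core id` fields for each logical processor, as x86 kernels typically do. The function name `count_cpus` and the sample text are made up for illustration.

```python
def count_cpus(cpuinfo_text):
    """Return (logical, physical) CPU counts from /proc/cpuinfo-style text.

    Assumes one blank-line-separated block per logical processor, each with
    'processor', 'physical id' and 'core id' fields (typical on x86 Linux).
    """
    logical = 0
    cores = set()
    for block in cpuinfo_text.strip().split("\n\n"):
        fields = {}
        for line in block.splitlines():
            if ":" in line:
                key, _, value = line.partition(":")
                fields[key.strip()] = value.strip()
        if "processor" not in fields:
            continue
        logical += 1
        # A physical core is identified by its socket and its core id.
        # If those fields are missing, fall back to treating each logical
        # processor as its own core.
        cores.add((fields.get("physical id", "0"),
                   fields.get("core id", fields["processor"])))
    return logical, len(cores)


# Hypothetical sample: one socket, two cores, two logical processors per core.
SAMPLE = """
processor : 0
physical id : 0
core id : 0

processor : 1
physical id : 0
core id : 1

processor : 2
physical id : 0
core id : 0

processor : 3
physical id : 0
core id : 1
"""

logical, physical = count_cpus(SAMPLE)
print(f"{logical} logical processors on {physical} physical cores")
```

On a real Linux machine, you could pass in the contents of `/proc/cpuinfo` instead of `SAMPLE`. If the logical count is twice the physical count, the operating system is most likely seeing two hardware threads per core.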

Some of the advantages of Hyper-Threading are:

- Run demanding applications simultaneously while maintaining system responsiveness.

- Keep systems protected, efficient, and manageable while minimizing the impact on productivity.

- Provide headroom for future business growth and new solution capabilities.

To sum up with an analogy, picture a machine that packs boxes. With a single conveyor belt, the machine has to wait after packing each box until the belt delivers the next one. Adding a second conveyor belt that feeds the machine while the first one is fetching another box boosts the packing speed. This is what Hyper-Threading does for each core of your CPU.
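The conveyor belt analogy can be turned into a small toy simulation. This is only a sketch under strong simplifying assumptions, not a model of how Intel's hardware actually schedules work: each task is a string of steps, where `C` is a cycle that needs the core's single execution unit and `S` is a cycle spent stalled (for instance, waiting on memory). The function names `run_serial` and `run_smt` are invented for this example.

```python
def run_serial(tasks):
    """One hardware thread: tasks run back to back.

    Every step, including a stall, costs one cycle, and the core sits
    idle while a task is stalled.
    """
    return sum(len(task) for task in tasks)


def run_smt(tasks):
    """Several hardware threads sharing one execution unit.

    Stalls of different threads elapse in parallel, but only one thread
    per cycle may use the execution unit. Earlier tasks in the list get
    priority, which is an arbitrary choice made for this sketch.
    """
    pos = [0] * len(tasks)  # index of the next step for each task
    cycles = 0
    while any(p < len(task) for p, task in zip(pos, tasks)):
        cycles += 1
        unit_busy = False
        for i, task in enumerate(tasks):
            if pos[i] >= len(task):
                continue  # this task has already finished
            if task[pos[i]] == "S":
                pos[i] += 1  # a stall passes without using the unit
            elif not unit_busy:
                pos[i] += 1  # this thread gets the execution unit
                unit_busy = True
            # otherwise the thread waits for the unit this cycle
    return cycles


stall_heavy = ["CSSC", "CSSC"]
compute_only = ["CCCC", "CCCC"]

print(run_serial(stall_heavy), run_smt(stall_heavy))    # 8 5
print(run_serial(compute_only), run_smt(compute_only))  # 8 8
```

In this model the two stall-heavy tasks finish in 5 cycles instead of 8, because one task computes while the other is stalled. The compute-only tasks see no gain, since there are no idle cycles left to fill.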